EMMECHE, C., 1996, The Garden in the Machine, The Emerging Science of Artificial Life

LEVY, S., 1993, Artificial Life, A Report from the Frontier where Computers meet Biology

Remark : This Essay presupposes many results from all the foregoing Essays.

Why an Essay about Artificial life?

This website is devoted to Ontology, the study of Being, with emphasis on beings. It is meant to be a general theory about some principles of being, especially the generating principles. We want to investigate where those generating principles (which we can denote as the Essences of the beings in question) reside, and to assess their reality status and their relation with all the other aspects of the beings in question. We also want to know in what way and how far these principles determine the holistic- or totality-nature of those beings, giving rise to their individuality. Such beings are for example crystals, and especially organisms. The latter are in a way prototypic examples of holistic beings, culminating in man, having self-consciousness, and so experiencing his individual ONE-ness.

And because the theory should be general and universal, we cannot confine ourselves to the study of the life we encounter on this planet, because it seems that, in contrast with molecules and especially crystals, life as we know it is not the only possible form of life. While a material life, based on chemistry, is hardly possible with other main materials (other than Carbon compounds), a totally different kind of life may be possible, for instance life within a computer environment. So in order to be universal we must also study these alternative life-forms. And experience tells us that when we plunge into the problems of Artificial Life, we are automatically also engaged in deep questions about life-as-we-know-it, especially its ontology.

There are several forms of Artificial Life, like for instance attempts to create life from a soup of chemicals, but in this Essay we will confine ourselves to computer-based Artificial Life (a-life).

On the one hand the relevant computer-based creations can be just simulations of (some aspects of) real life, i.e. the form of material carbon-based life we encounter on Earth. Such simulations are meant to increase our insight in life-as-we-know-it.

On the other hand the relevant computer-based creations are meant to BE a life-form themselves. This is Artificial Life in its strict (" strong ") sense.

Of course at present there still does not exist any computer-based creation that is unanimously acknowledged as being alive. What has been accomplished is just the computer-based creation of some aspects which are believed to belong to one form of life or another. Most of these aspects are organic functions and behavior, not organic form, i.e. morphology (L-systems are an important exception). But of course it could be that computer-based life consists mainly of function and behavior and less of explicit morphological structure. Behavior in the real material world is made possible by morphological structure. In computer-based life, behavior is made possible by imposing formal Rules on simple morphological structures. The creatures presently crafted or evolved within computers are, as has been said, not yet alive, but can be considered as computer-based proto-organisms, comparable with the real creatures that inhabited the Earth immediately before the advent of life : they subsequently evolved into real living organisms.

In order to assess whether computer creatures are really alive we need, it seems, some criteria for something to be alive, in short we need a definition of life. But such a definition is not yet within reach. It moreover seems that there cannot be such a definition. Life should be gauged on a continuum, and not granted according to a binary decision. Things can be more or less alive. Life is a property showing degrees. A most important general condition for aliveness is the existence of complex systems, as a key component in biological entities. A complex system is one whose component parts interact with sufficient intricacy that their behavior cannot be predicted by standard linear equations. So many variables are at work in the system that its overall behavior can only be understood as an emergent consequence of the holistic sum of all the myriad behaviors embedded within (LEVY, p. 7/8). What is generally accepted, within all the uncertainty about the nature of life, is that life does NOT possess some supernatural component.

To get some flavor of Artificial Life I will cite a part of the opening page of LEVY's book Artificial Life :

" The creatures cruise silently, skimming the surface of their world with the elegance of ice skaters. They move at varying speeds, some with the variegated cadence of vacillation, others with what surely must be firm purpose. Their bodies -- flecks of colors that resemble paper airplanes or pointed confetti -- betray their needs. Green ones are hungry. Blue ones seek mates. Red ones want to fight.

They see. A so-called neural network bestows on them vision, and they can perceive the colors of their neighbors and something of the world around them. They know something about their own internal states and can sense fatigue. They learn. Experience teaches them what might make them feel better or what might relieve a pressing need.

They reproduce. Two of them will mate, their genes will merge, and the combination determines the characteristics of the offspring. Over a period of generations, the mechanics of natural selection assert themselves, and fitter creatures roam the landscape.

They die, and sometimes before their bodies decay, others of their ilk devour the corpses. In certain areas, at certain times, cannibal cults arise in which this behavior is the norm. The carcasses are nourishing, but not as much as the food that can be serendipitously discovered on the terrain.

The name of this ecosystem is PolyWorld, and it is located in the chips and disk drives of a Silicon Graphics Iris Workstation. The sole creator of this realm is a researcher named Larry Yaeger, who works for Apple Computer. It is a world inside a computer, whose inhabitants are, in effect, made of mathematics. The creatures have digital DNA. Some of these creatures are more fit than others, and those are the ones who eventually reproduce, forging a path that eventually leads to several sorts of organisms who successfully exploit the peccadilloes of PolyWorld."

Figure 2. The creatures of PolyWorld have evolved from random genomes : Over generations they become skilled at seeking food (green patches), reproducing, and fighting.

( After LEVY, 1992, Artificial Life )

PolyWorld is a typical example of an Artificial Life effort. The capacity of its creatures to evolve is considered crucial. And clearly we see the emphasis on behavior, and the almost complete absence of individual morphology. This, it seems, is a consequence of the life in question being computer-based.

Artificial Life tries to uncover which properties of life-as-we-know-it are typical for just this kind of life, and which properties are universal for living beings, i.e. properties that must be possessed by every kind of life. We will focus our discussion on the latter properties, which will guarantee that our ontological interpretation is general. For us it is important that Artificial Life starts with very simple elements that interact with each other according to explicit rules (dynamical laws), and this will give us a means to assess the general nature of ultimate dynamical laws governing each organism, including real ones. As outlined in a number of essays on this website, such an ultimate dynamical law, governing the morphology and behavior of an organism, can be interpreted as the prime ontological principle of that organism, its Essence.

A-life tries to establish dynamical laws like L1 that, when implemented on a substrate, generate an organism.

A-life generally uses the computer as a substrate. It must provide the computer-entities (screen-pixels say) with the relevant properties, but this can only be done by associating them with the dynamical law, which is by necessity transcendent with respect to these entities (in contradistinction with real systems). Now these entities interact according to that transcendent law and in doing so we could perhaps -- from that point onwards -- interpret that law as immanent. The substrate, which is per accidens with respect to the law, IS (identical with) the rest of the relevant computer-hardware. It depends on the very character of the dynamical law whether we will assess (i.e. evaluate) the generated Totality (Totalities) as living. The dynamical law itself implies only a sequence of logical steps, but if this law is implemented in hardware (its substrate) then we will obtain a material process.

To explain the concept of the Ultimate Dynamical Law of a particular organism (or whatever Totality) once more, I can state the following :

Actual, Contingent History of the organic Totality

When we trace back the actual ontogenetic and evolutionary (phylogenetic) history of a particular organism, we obtain a succession of material states, leading from the organism to a certain collection of atoms and simple chemicals of the primordial atmosphere. Of course the real succession (the succession that actually took place) goes the other way around, leading from a certain collection of atoms and simple molecules formerly present in the primordial atmosphere to that completely finished organism under investigation. This history reaches still a little further back in time, because we also have to trace back the formation of the mentioned simple molecules from their constituent atoms, which did not happen in a direct way, straight from the constituent atoms to such a molecule.

This (total) history constitutes the actual history of that particular organism, i.e. the actual historical process of the formation of that organism from a collection of atoms, which can be considered as the ultimate constituents of that organism (See NOTE 1 ).

This actual history consists of a long series of successive atomic configurations (i.e. patterns of atoms, representing molecules and clusters of molecules). And because every non-random series of successive patterns must in principle be describable as a succession of states of a dynamical system, we have to do with a dynamical law, governing the atomic and molecular interactions, leading to the formation of that particular organism.

Is this law then the Essence of that organism?

No, it isn't.

Virtual non-contingent History of the organic Totality

The above described law, governing the actual historical sequence of atomic and molecular patterns, leading to the actual formation of that particular organism, is full of historical contingencies, at least up to the formation of the fertilized egg-cell of the particular organism in question (The history from that egg-cell to the adult organism probably does not contain any contingent elements).

From the perspective of that organism its historical formation (from atoms) made a detour. Its formation -- as it actually happened -- was very indirect, because the whole actual (evolutionary) process consists of trials and errors. Its history is littered with random mutations in the genetic systems, and selection took place on the basis of survival-value with respect to an ever changing environment. Consequently many aspects of this actual history are per accidens with respect to that organism's intrinsic atomic and molecular pattern, i.e. to that organism's actual structure (and the behavior that these structures make possible). And because Essence is something per se (with respect to that organism, i.e. its Essence is all that necessarily belongs to it), and consequently opposed to anything that is per accidens, this law, governing the actual historical succession of states, leading from atoms to that organism's final structure, CANNOT as such be the Essence of that organism.

What then is the Essence?

The Essence of that particular organism is the dynamical law which remains after subtraction of ALL the contingent elements (i.e. all the per accidens elements) of the actual formational history. So we now consider a non-contingent shortcut of the actual history. This shortcut is a succession of states leading from a relevant collection of atoms directly to the organism. This shortcut-history must of course be physically and chemically possible, which is why a long succession of states is still necessary (in contrast with, say, a process of the formation of the organism from a collection of atoms in ONE step (or only a few steps)). This shortcut would actually be obtained if we, as experimenters, were able to synthesize the organism directly from a collection of atoms. During this synthesizing in the laboratory there are no historical contingencies, at least not in a final synthesizing method. Of course we, experimenters, design such a synthesis, but that means that we only allow certain conditions to prevail. The atoms and molecules themselves do all the synthesizing. Such a set-up is and must be comparable with the experiments conducted by Stanley Miller, and by subsequent researchers inspired by him, to synthesize organic building-blocks (nucleotides, amino acids, etc.) under certain conditions. The synthesizing of that organism could be interpreted as a form of artificial life, but in fact it is only a repetition of the formation of that organism, albeit in a direct way. What we normally understand by the term " Artificial Life " is the creation by man of life on a different basis than life as we know it.

So, the dynamical law, inherent in the sequence of states, a sequence leading directly from atoms to that organism, is the Essence of that organism. It concerns a physically and chemically feasible sequence of material states, i.e. it is physically and chemically realistic, but it is stripped of any (historical) contingency, and ' constructed ' (in real experiments, or thought-experiments) NOT (from the) top-down, but (from the) bottom-up, i.e. by self-organizing principles.

Artificial Life in computers concerns a collection of relatively simple elements, as an initial condition (initial state), and a relatively complex Rule that organizes those elements into very complicated dynamic configurations or patterns. Complicated behavior is then emergent and implies functional behavior, like flocking, foraging, reproduction, etc. This Rule, if it generates a certain complete set of behaviors (and, possibly, morphologies), is -- and must be -- comparable with the aforementioned Ultimate Dynamical Law of one or another really existing organism. In both cases the Rule (the Law) must be relatively complex, and consist of much information, because the elements (atoms, pixels) themselves contain only a moderate amount of information (in a collection of atoms in fact many many potential dynamical laws inhere, but that means that there is in fact little information present, because information consists in constraints). It all amounts to their way of combining with each other into patterns, and information means a determination toward one or a few such patterns, and this information must reside in the one dynamical law that leads to that pattern. And that in turn means the ' activation ' of certain special properties of the atoms.

The dynamical law must imply a suitable environment for sustaining the ongoing dynamics, i.e. that law is not demanding such an environment but brings it with it. Such an environment consists of (physical) matter and energy or their (computer-)equivalents. Besides this the dynamical law demands (and now : not implies) some external conditions or a succession of external conditions like temperature and pressure (or their computer-equivalents) to prevail in order to let the law operate.

In real organisms their Essence is inherent in the relevant (physical) matter, i.e. in some properties of the relevant elements. In order to conceptually isolate these properties (from other not relevant properties) we describe their collection as a dynamical law. This dynamical law is accordingly equivalent to a set of properties and can be interpreted as FORM. The remaining properties, that do not enter the domain of the dynamical law (i.e. that do not go into the constitution of that law), can be interpreted as the substrate or MATTER relative to that FORM. As has been said, FORM and MATTER are in this context only relative, not absolute. This means that the dynamical law is not ' just formal ' and the substrate ' just material'.

Artificial Life

In computer-generated artificial life a Rule is set up that is interpreted as the implication of, or, if one wishes to say, the equivalent to, certain boundary conditions. The physical set-up of the computer cannot be violated by such a Rule. In artificial life experiments such a Rule, together with an initial condition, forms the a-life system (In real systems the Rule and the initial condition are not separated). Taken in itself such a system is just a set of symbols 0 and 1 which are first of all interpreted as numbers. These numbers are replaced by other numbers according to the Rule. As such this system does not embody movement and space, and consequently no dynamic spatial patterns and processes. But the system is geared to a further interpretation. This further interpretation is realized with the display devices of the computer. And now we can see the movements and the spatial relationships, and consequently the dynamic patterns. With respect to life on Earth these patterns can only be a simulation (of those earthly patterns), not that Earthly life itself. But these computer-generated dynamic patterns can themselves be another kind of life, in this case a computational kind of life, different from life as we know it. It is important to realize that there is probably not a sharp transition between inorganic structures and living structures (living structures not necessarily of the Earthly type) -- the latter only demand a certain degree of complexity, that allows for self-maintenance and reproduction. So there is no sharp boundary between certain complex inorganic structures and certain relatively simple organic structures.
The intermediate forms (between inorganic and organic) existed on Earth long ago, but after a certain brand of life had finally established itself, those intermediate forms (existing as relicts) could no longer maintain themselves : they were eaten by the existing living forms (maybe we could still find them at the volcanic vents at the bottom of the oceans where new crust material is being formed). We observe the same phenomenon with respect to intermediate forms between organic species.
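The three layers described above (a state made of the symbols 0 and 1, a Rule that replaces numbers by other numbers, and a display step that turns the numbers into a visible spatial pattern) can be sketched in a few lines. This is only an illustration under assumptions : the Essay names no particular Rule at this point, so the sketch swaps in a one-dimensional elementary cellular automaton with the usual 8-bit rule numbering, and the names step, render and run are hypothetical.

```python
def step(cells, rule):
    """Apply an elementary cellular automaton rule once (wrap-around edges)."""
    n = len(cells)
    nxt = []
    for i in range(n):
        # Read the left, centre and right cell as a 3-bit number (0..7).
        neighbourhood = (cells[i - 1] << 2) | (cells[i] << 1) | cells[(i + 1) % n]
        # Bit number `neighbourhood` of the rule is the new state of cell i.
        nxt.append((rule >> neighbourhood) & 1)
    return nxt


def render(cells):
    """The 'further interpretation': show the numbers as a spatial pattern."""
    return "".join("#" if c else "." for c in cells)


def run(cells, rule, generations):
    """Return the successive states, starting with the initial condition."""
    history = [cells]
    for _ in range(generations):
        cells = step(cells, rule)
        history.append(cells)
    return history


if __name__ == "__main__":
    initial = [0] * 15 + [1] + [0] * 15  # initial condition: a single 1
    for row in run(initial, 90, 8):      # rule 90 is an arbitrary choice
        print(render(row))
```

Without render the system is only a list of numbers being replaced by other numbers; the spatial pattern appears only once the states are displayed one below the other.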

So for computer-generated ' life ' to be evaluated (as either living or non-living), we will not have at our disposal a complete list of criteria, only some general features, which must in some way be present in something which is living. So the debate on the status of the products of Artificial Life will for the time being remain fruitless. We have to await further developments in Artificial Life research.

I spoke about tracing -- conceptually -- the direct (virtual) history of an organism (whose Essence we want to assess in general terms), a history without all the contingencies that actually occurred during its real history. How must we -- conceptually -- trace back this direct history? It seems that we must first trace back from the complete organism to the fertilized egg-cell from which it had ontogenetically developed, and then continue our virtual history from this fertilized egg-cell back to the set of as yet unorganized atoms. This implies a sequence of feasible chemical reactions, leading from this unorganized set of atoms to the fertilized egg-cell. Of course in the actual history the final synthesis of that egg-cell was accomplished via a huge number of (genetic) generations of organisms in the context of the evolutionary development via Self-organization, Mutation and Natural Selection, but this history is, as I have noted several times already, from the viewpoint of the final structure of that particular organism, full of contingencies and trial and error processes. So the virtual history, leading from the unorganized set of atoms to the egg-cell (of that particular organism), and from there to the adult organism (in fact all the way to its death), is composed of a non-random sequence of states, and such a sequence can in principle be described by a dynamical law. This law then is the Ultimate Dynamical Law, or Essence, of that organism. It is a law, determining a sequence of states. How, i.e. by what structures, are those states represented? Are they the (virtual) phenotypes or the (virtual) genotypes? It seems to me that they are the latter. The virtual history consists of the direct (as direct as chemically possible) synthesis of the particular DNA molecules present in the fertilized egg-cell, the egg-cell that now gives rise to the particular organism whose Essence we want to assess.
The accompanying phenotypes of the (virtual) generations that lead to that organism are in fact necessary conditions for that particular DNA to develop, but they are only conditions, and thus per accidens with respect to the structure of the final product, the DNA of the organism under investigation. That particular DNA is chemically formed out of other DNA molecules, and these ultimately out of (collections of) atoms.

That part of the history, leading from the fertilized egg-cell onwards is largely already determined by the structure of that cell, especially its DNA. So whether the organism dies prematurely or not, will not affect the very structure of the dynamical law (which is already determined by a (significant) part of the (total) succession-series of states) and is per accidens. The penultimate law moreover is already written in that cell. All kinds of perturbations during the organism's life are thus per accidens with respect to its Essence.

Aspects of Information, Life Reality, and Physics

Within the Artificial Life Community one occasionally ponders an ontological interpretation of artificial life creations within computers.

At the Second A-life Conference, Rasmussen, a Danish physicist involved in artificial life, distributed an intentionally provocative crib sheet titled " Aspects of Information, Life Reality, and Physics." It contained seven statements, 1, 2, 3, 4, 5, 6, and 7, logically connected. I will reiterate these statements accompanied by some comments between [ ]. (See LEVY, p. 145) :

As LEVY remarks, the final proposition provided a wondrous justification for creating artificial universes.

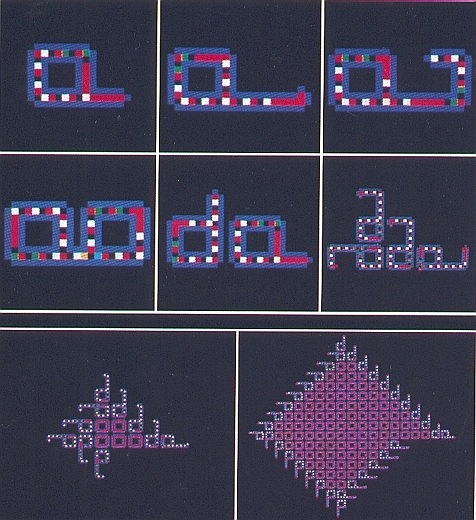

Figure 3. Chris Langton's cellular automaton ' loops ' reproduce in the spirit of life. Beginning from a single organism, the loops form a colony. As the loops on the outer fringes reproduce, the inner loops -- blocked by their daughters -- can no longer produce offspring. These dead progenitors provide a base for future generations' expansion, much like the formation of a coral reef. This self-organizing behavior emerges spontaneously, from the bottom up -- a key characteristic of artificial life.

( From LEVY, 1992, Artificial Life )

It is asserted that the ability and actual occurrence of open-ended evolution is a necessary characteristic of life. Indeed, this is true for organisms in order to be able to maintain themselves in the long run (i.e. to prolong themselves in future generations) in an ever changing environment. This is what has happened (and still happens) on the planet Earth.

It is however not a necessary characteristic of a living organism as far as its actual constitution is concerned. Most creatures of Artificial Life are indeed just some sort of Totalities that execute some biological functions, which can be summarized by (the function of) LIVING, or at least an analogue of it. These creatures are constituted in a certain way, exhibiting a greater or lesser degree of a SELF. They live in a stable environment. That does not make them less real. Whether the environment is stable or changing in the long run is ontologically per accidens with respect to their individual SELF, their Essence (i.e. what they are in themselves). Put differently, whether the environment is stable or changing in the long run is ontologically, i.e. metaphysically -- remember that Metaphysics is about the (beingness of) the individual being -- per accidens with respect to an organism's Essence; it is however physically (biologically) crucial, and consequently per se with respect to the maintenance of the organismic species (and beyond). A living individual organism can cope with the prevailing environment because of its very constitution. But it cannot normally survive when the environment changes drastically within its life-time. Coping with such change must be accomplished by evolution, thus at the level of the species, or beyond. So in order to maintain itself in the long run, life creates the phenomenon of succeeding generations that slightly change and adapt to the changing environment. So only for a supra-individual maintenance of life is its ability for open-ended evolution (in which fitness-criteria are determined by the changing environment) a necessary characteristic. An individual organism, insofar as individual, does not have to anticipate changes in the environment way out in the future. But for the maintenance of life as such, and with it the supra-individual maintenance of that organism, it must do so (which means : be so).

When we want to create something living (in a general sense), then the creation of a Totality with a SELF, and the ability to be born, grow, reproduce and die, could already be sufficient.

But to simulate life as we know it is a different matter. In this case the creation must have an ability for open-ended evolution, in order to maintain itself supra-individually under changing environments.

Such a simulation has been accomplished by some a-life researchers, especially by Thomas RAY. His simulator is called Tierra (which means Earth). The creatures in Tierra do indeed have the ability for open-ended evolution. Open-ended evolution means that the system is not task-oriented but environment-oriented. In earthly life this environment is mainly its biotic part; the purely physical part is relatively insignificant. The same applies to artificial systems : The environment a digital organism has to cope with is the collection of the other organic individuals, and this environment constantly changes because those organisms change (for example by mutations of their code). The artificial evolution is thereby subjected to the laws of Logic rather than those of physics and chemistry.

TIERRA itself is a parallel virtual computer (within a ' real ' computer; this is done for reasons of security : the digital organisms cannot escape and multiply over computer networks). Each digital organism has its own Central Processing Unit (CPU). Such an organism consists of a line of code (i.e. series of instructions), which is its genotype. The execution of that code can be interpreted as its phenotype. Just as earthly life consumes energy (that ultimately comes from the sun) in order to organize its material, digital life can be seen as a consumer of the main computational device in the computer, the CPU, and this CPU is used for a certain period of time to organize the memory of the computer. In TIERRA CPU-time (concerning the CPU of the virtual computer) is the analogue of energy resources, and the computer memory corresponds to the spatial resource. The computer memory, the CPU and the computer's operating system correspond to the abiotic environment, the self-replicating programs (in assembler code) correspond to the organisms. So the Tierran organisms draw their energy from the virtual computer's Central Processing Unit and use that energy to power the equivalent of their own energy centers, virtual CPUs assigned to each organism.

TIERRA does not start from the inoculation of one or another random structure -- i.e. it is not going to simulate the origin of some kind of life -- but its environment was inoculated with a full-fledged digital organism, capable of mutation and replication. The system is designed in such a way that a competitive situation arises with respect to CPU-time and memory resources. When run, the system will display open-ended evolution resulting in more and more efficient codes (representing the organisms). Organisms with smaller instruction-sets normally use fewer resources and consequently are fitter. But their instruction-sets must enable the digital organism to replicate, so they cannot be too short. The first organism that was inoculated into the Tierran environment, the Ancestor, had 80 instructions. Soon mutants appeared with shorter instruction-sets using fewer resources. The system had discovered more successful strategies for coping with its environment. The proceedings were tracked by means of a dynamic bar chart, which identified the types of organisms and the degree to which they proliferated in the ' soup'.
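Why a shorter instruction-set is fitter when CPU-time and memory are scarce can be made concrete with a toy model. The sketch below is not Ray's system : organisms are reduced to their genome length, there is no mutation and no real instruction set, and the name run_soup, the fixed time slice, the capacity and the oldest-first removal rule are all assumptions made for illustration. The length 80 is the Ancestor's, the length 60 is a hypothetical shorter mutant.

```python
from collections import Counter, deque


def run_soup(initial_lengths, capacity, slice_size, rounds):
    """Toy competition for CPU-time and memory between replicators.

    Each organism is a pair [genome_length, instructions_executed].
    Copying itself costs an organism as many executed instructions as
    its genome is long (an assumption of this sketch).
    """
    soup = deque([length, 0] for length in initial_lengths)  # oldest on the left
    for _ in range(rounds):
        newborns = []
        for organism in soup:
            organism[1] += slice_size           # every organism gets the same CPU slice
            while organism[1] >= organism[0]:   # enough 'energy' for one copy
                organism[1] -= organism[0]
                newborns.append([organism[0], 0])
        soup.extend(newborns)
        # Memory is the spatial resource: when it overflows, the oldest die first.
        while sum(length for length, _ in soup) > capacity:
            soup.popleft()
    return Counter(length for length, _ in soup)


if __name__ == "__main__":
    print(run_soup([80, 60], capacity=600, slice_size=60, rounds=10))
```

In this toy run the 60-instruction type copies itself in every round, while a newborn 80-instruction organism needs two rounds before its first copy; once memory is full, the 80-instruction lineage is removed before it can reproduce and disappears from the soup.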

Then something very strange happened.

Let me quote LEVY, p. 222 :

"In the lower regions of the screen a bar began pulsing [ the length of the bar represents the amound of organisms of the relevant type ]. It represented a creature of only forty-five instructions! With so sparse a genome [ its instruction-set ], a creature could not self-replicate on its own in TIERRA -- the process required a minimal number of instructions, probably, Ray thought, in the low sixties. Yet the bar representing the population of forty-five instructions soon matched the size of the bar representing the previous most populous creature. In fact, the two seemed to be engaged in a tug-of-war. As one pulsed outward, the other would shrink, and vice versa.

It was obvious what had occurred. A providential mutation had formed a successful parasite. Although the forty-five instruction organism did not contain all the instructions necessary for replication, it sought out a larger, complete organism as a host and borrowed the host's replication code. Because the parasite had fewer instructions to execute and occupied less CPU time, it had an advantage over complete creatures and proliferated quickly. But the population of parasites had an upper limit. If too successful, the parasites would decimate their hosts, on whom they depended for reproduction. The parasites would suffer periodic catastrophes as they drove out their hosts.

Meanwhile, any host mutations that made it more difficult for parasites to usurp the replication abilities were quickly rewarded. One mutation in particular proved cunningly effective in " immunizing " potential hosts -- extra instructions that, in effect, caused the organism to " hide " from the attacking parasite. Instead of the normal procedure of periodically posting its location in the computer memory, an immunized host would forgo this step. Parasites depended on seeing this information in the CPU registers, and, when they failed to find it, they would forget their own size and location. Unable to find their host, they could not reproduce again, and the host would be liberated. However, to compensate for its failure to note its size and location in memory, the host had to undergo a self-examination process after every step in order to restore its own " self-concept ". That particular function had a high energy cost -- it increased the organism size and required more CPU time -- but the gain in fitness more than compensated. So strains of immunized hosts emerged and virtually wiped out the forty-five-instruction parasites.

This by no means meant the end of parasitism. Although those first invaders were gone, their progeny had mutated into organisms adapted to this new twist in the environment. This new species of parasite had the ability to examine itself, so it could " remember " the information that the host caused it to forget. Once the parasite recalled that information it could feast on the host's replication code with impunity. Adding this function increased the length of the parasite and cost it vital CPU time, but, again, the trade-off was beneficial."

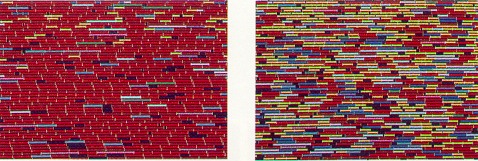

Panel layout: 1 2 (top row), 3 4 (bottom row).

Figure 4. Evolutionary arms race between hosts and parasites in the TIERRA Synthetic Life program. Images were made using the Artificial Life Monitor (ALmond) program developed by Marc Cygnus. Each creature is represented by a bar. The color corresponds to genome size (e.g., red = 80, yellow = 45, blue = 79).

(1) Hosts, red, are very common. Parasites, yellow, have appeared but are still rare.

(2) Parasites have become very common. Hosts immune to parasites, blue, have appeared.

(3) Immune hosts now dominate memory, while parasites and susceptible hosts decline.

(4) The parasites will soon be driven to extinction.

(From COVENEY & HIGHFIELD, 1995, Frontiers of Complexity)

TIERRA also developed hyper-parasites, creatures that force other parasites to help them multiply, although they can reproduce in their own right. Symbiotic -- cooperative -- behavior developed as well, in which each creature relied on at least one other to reproduce.

In most Artificial Life systems the phenotype is behavior; we do not normally see life-like material structures. Such structures do appear in L-systems. I have treated these in a separate Essay, L-systems, because L-systems do not show behavior in the strict sense but simulate one important biological function, namely morphological construction. The reader is referred to that Essay.

Plant05

Number of iterations 8

Axiom X

Rules :

X = F[+X][-X]FX

F = FF

Angle = 14

Output (Figure 5.) :

Figure 5.

Plant05

Number of iterations 10

Axiom X

Rules :

X = F[+X][-X]FX

F = FF

Angle = 14

Output (the effect of more iterations is clearly observable)(Figure 6.) :

Figure 6.
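The rewriting that underlies Plant05 can be sketched in a few lines of Python. This is only an illustrative sketch, not the program that produced Figures 5 and 6: the names `expand` and `PLANT05_RULES` are our own, and we assume that symbols without a rule (+, -, [ and ]) are copied unchanged at every iteration.

```python
def expand(axiom: str, rules: dict, iterations: int) -> str:
    """Rewrite every symbol in parallel, `iterations` times."""
    current = axiom
    for _ in range(iterations):
        # Assumption: a symbol with no rule is simply copied.
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


# Rules of Plant05 as listed above.
PLANT05_RULES = {"X": "F[+X][-X]FX", "F": "FF"}

if __name__ == "__main__":
    for n in range(3):
        print(n, expand("X", PLANT05_RULES, n))
    # Length of the command string behind Figure 5 and Figure 6.
    print(len(expand("X", PLANT05_RULES, 8)))
    print(len(expand("X", PLANT05_RULES, 10)))
```

Every X yields three new X's per iteration and every F doubles, so the string grows very quickly. That growth is what makes the two extra iterations of Figure 6 so clearly visible.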

Plant08

Number of iterations 8

Axiom SLFFF

Rules :

S = [+++Z][---Z]TS

Z = +H[-Z]L

H = -Z[+H]L

T = TL

L = [-FFF][+FFF]F

Angle = 20

Output (Figure 7.):

Figure 7.

Plant08

Number of iterations 10

Axiom SLFFF

Rules :

S = [+++Z][---Z]TS

Z = +H[-Z]L

H = -Z[+H]L

T = TL

L = [-FFF][+FFF]F

Angle = 20

Output (Figure 8.):

Figure 8.
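How such a string of symbols becomes a drawing can be sketched with the usual "turtle" reading of L-system commands. The listings above do not state this reading, so the following are assumptions: F draws a unit line forward, + and - turn by the given angle (the direction of + is a guess), [ saves the current position and heading, ] restores it, and every other letter (S, Z, H, T, L) only steers the rewriting and draws nothing. The names `expand`, `turtle_segments` and `PLANT08_RULES` are our own.

```python
import math


def expand(axiom: str, rules: dict, iterations: int) -> str:
    """Rewrite every symbol in parallel; symbols without a rule are copied."""
    current = axiom
    for _ in range(iterations):
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


def turtle_segments(commands: str, angle_deg: float, step: float = 1.0):
    """Return the line segments drawn by a turtle that starts at the origin, pointing up."""
    x, y, heading = 0.0, 0.0, 90.0
    stack = []      # saved (x, y, heading) states for '[' and ']'
    segments = []
    for c in commands:
        if c == "F":
            nx = x + step * math.cos(math.radians(heading))
            ny = y + step * math.sin(math.radians(heading))
            segments.append(((x, y), (nx, ny)))
            x, y = nx, ny
        elif c == "+":
            heading += angle_deg   # assumed: '+' turns counter-clockwise
        elif c == "-":
            heading -= angle_deg
        elif c == "[":
            stack.append((x, y, heading))
        elif c == "]":
            x, y, heading = stack.pop()
        # Assumption: all other symbols are ignored by the turtle.
    return segments


# Rules of Plant08 as listed above.
PLANT08_RULES = {
    "S": "[+++Z][---Z]TS",
    "Z": "+H[-Z]L",
    "H": "-Z[+H]L",
    "T": "TL",
    "L": "[-FFF][+FFF]F",
}

if __name__ == "__main__":
    commands = expand("SLFFF", PLANT08_RULES, 8)
    print(len(turtle_segments(commands, angle_deg=20.0)), "line segments")
```

The returned segments can be handed to any plotting tool. Whether the result matches Figures 7 and 8 depends on the assumptions just listed.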

Beautiful images can be created with Laurens Lapré's Lparser, a sophisticated L-system program and associated viewer:

Figure 9.

Here is a fractal fern type leaf on a nice reflecting background. Using the -i option with the Lparser generates a series of linked cylinder shapes.

Figure 10.

Here is a series of mutations based on a flower shape, using polygons together with the basic F elements.

Figure 11.

These four images demonstrate the principle of mutation. The mother shape is at top left and the rest are her offspring. The children are clearly descended from the basic shape, but their differences increase with the number of mutations performed on the original L-system.

Boids are another interesting a-life creation.

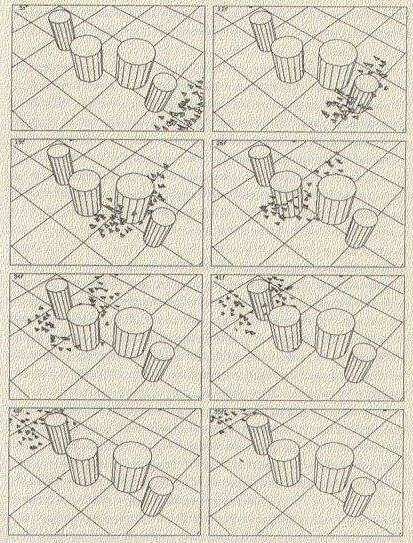

Figure 12. A flock of Craig Reynolds's " boids " confront a cluster of pillars. Since their behavior is emergent, it was not clear even to Reynolds how the columns would affect the flight of the boids. As it happened, the flock was undaunted -- it temporarily split into two flocks and then reunited.

(From LEVY, 1992, Artificial life)
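The emergent character of such flocking can be made concrete with a small sketch. Reynolds gave each boid three local steering rules (separation, alignment and cohesion), and the flock as a whole is never described anywhere in the program. The code below is our own minimal two-dimensional version, not Reynolds's: the names `Boid`, `step_flock` and `random_flock`, the neighbourhood radius and all weights are hypothetical, and the pillars of Figure 12 are not modeled.

```python
import math
import random
from dataclasses import dataclass


@dataclass
class Boid:
    x: float
    y: float
    vx: float
    vy: float


def step_flock(boids, radius=10.0, min_dist=2.0,
               w_sep=0.05, w_align=0.05, w_coh=0.01, max_speed=2.0):
    """Return a new list of boids after one synchronous update."""
    updated = []
    for b in boids:
        # A boid only perceives neighbours within `radius`.
        near = [o for o in boids
                if o is not b and math.hypot(o.x - b.x, o.y - b.y) < radius]
        vx, vy = b.vx, b.vy
        if near:
            n = len(near)
            # Cohesion: steer toward the centre of the visible neighbours.
            vx += w_coh * (sum(o.x for o in near) / n - b.x)
            vy += w_coh * (sum(o.y for o in near) / n - b.y)
            # Alignment: steer toward the neighbours' average velocity.
            vx += w_align * (sum(o.vx for o in near) / n - b.vx)
            vy += w_align * (sum(o.vy for o in near) / n - b.vy)
            # Separation: move away from neighbours that are too close.
            for o in near:
                if math.hypot(o.x - b.x, o.y - b.y) < min_dist:
                    vx += w_sep * (b.x - o.x)
                    vy += w_sep * (b.y - o.y)
        # Limit the speed so the flock does not accelerate without bound.
        speed = math.hypot(vx, vy)
        if speed > max_speed:
            vx, vy = vx * max_speed / speed, vy * max_speed / speed
        updated.append(Boid(b.x + vx, b.y + vy, vx, vy))
    return updated


def random_flock(n, seed=0, size=30.0):
    """Scatter n boids with random positions and velocities (hypothetical setup)."""
    rng = random.Random(seed)
    return [Boid(rng.uniform(0, size), rng.uniform(0, size),
                 rng.uniform(-1, 1), rng.uniform(-1, 1)) for _ in range(n)]


if __name__ == "__main__":
    flock = random_flock(20)
    for _ in range(50):
        flock = step_flock(flock)
    print(flock[0])
```

Nothing in `step_flock` refers to the flock as a unit. Any collective motion that appears comes only from the repeated application of the three local rules.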

" This is the telescoping of an obvious truism -- any simulation of something cannot be the same as the object it simulates -- to a general criticism of the methodology of simulation. Even those enthusiastic about the Weak Claim to a-life [ 'Weak Artificial Life' only claims that its creations are simulations of living things or aspects of living things; they are not thereby claimed to be alive ], like Howard H. Pattee, have warned that its practitioners should resist the temptation to assume that fascinating results of computer experiments had relevance to the physical world. But in practice a-life frees itself from that dilemma by insisting that, although, indeed, a computer experiment is by no means equivalent to something it may be modeled on, it certainly is something. Maps are not the territory, but maps are indeed territories. This brings us back to the evaluation of the nature of computer viruses.

The methodology of a-life also shatters a related objection, that computer experiments, by their deterministic nature, can never attain the characteristics of true living systems. According to this argument, life cannot emerge from a mere execution of algorithms : in the natural world any number of chance occurrences contributed to the present biological complexity. But random events are indeed well integrated into artificial life. Von Neumann himself proposed, although he did not have the chance to design, a probabilistic version of his self-reproducing cellular automaton, which obviated the deterministic nature of the previous version. Many experiments in a-life, especially those that simulate evolution, include a step where random events make each iteration unpredictable. In any case, even in deterministic systems such as the CA game, Life [ 'Life' is a famous two-dimensional Cellular Automaton that displays very complex behavior ], the cacophony of variables is sufficiently complex that the system can yield unbidden, or emergent, behavior."
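The point about deterministic systems can be illustrated with Life itself. The sketch below is our own minimal version, assuming the standard rules of Conway's Life (a dead cell with exactly three live neighbours is born, a live cell with two or three live neighbours survives). The names `life_step`, `run` and `GLIDER` are ours. No random number is used anywhere, yet the five-cell "glider" travels diagonally across the grid, a behavior that the rules never mention.

```python
from collections import Counter


def life_step(live: set) -> set:
    """One generation of Life on an unbounded grid; `live` holds (x, y) cells."""
    # Count, for every cell, how many live neighbours it has.
    counts = Counter(
        (x + dx, y + dy)
        for (x, y) in live
        for dx in (-1, 0, 1)
        for dy in (-1, 0, 1)
        if (dx, dy) != (0, 0)
    )
    # Birth on exactly 3 neighbours, survival on 2 or 3.
    return {cell for cell, n in counts.items()
            if n == 3 or (n == 2 and cell in live)}


def run(live: set, generations: int) -> set:
    for _ in range(generations):
        live = life_step(live)
    return live


# The glider, with y increasing downward:
#   . O .
#   . . O
#   O O O
GLIDER = {(1, 0), (2, 1), (0, 2), (1, 2), (2, 2)}

if __name__ == "__main__":
    after = run(GLIDER, 4)
    shifted = {(x + 1, y + 1) for (x, y) in GLIDER}
    print(after == shifted)  # the same shape, moved one cell diagonally
```

A probabilistic variant of the kind mentioned in the quotation would add a random step to `life_step`. The sketch deliberately omits it, to show that emergent behavior does not depend on it.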

Life (as defined by Farmer & Belin)

This is a pretty broad definition of Life, but, as I have already emphasized above, it should be so, in order not to confine the definition to the form of Life that we happen to encounter here on Earth. Furthermore, the list must not be seen as something definitive that rigorously and exclusively characterizes Life.

It could be interesting to accompany this list with the intentions and ideas of the new research program Artificial Life in its strongest and most ambitious form. Seven such ideas are cited by EMMECHE in his book The Garden in the Machine, 1996, p. 17-20 :

The bottom-up method in artificial life imitates or simulates processes in nature that organize themselves. We might also call these processes " simulated self-organization".

All research of this kind is directed toward an understanding of living systems in general. Its procedures and results directly evoke fundamental questions involving ontology, the origin of life, consciousness, the nature of computation, security, and even morality. Like any other science it should be done with caution, theoretically as well as practically. The existence of computer viruses already teaches us about those two aspects. Although the new science is now well under way, it is just the beginning of a series of staggering creations that can no longer be denied the status of aliveness.